We live in a world where incidents – both minor and major – occur with increasing frequency. These incidents may impact on our organisations, our stakeholders, and our broader communities – in some cases they may threaten the very survival of our organisations.

Our Incident Management webinars held in March-April 2023 gave a practical overview of how to manage incidents from an Enterprise Risk Management perspective. In this blog, we'll discuss the audience polls and the questions asked at the webinar.

Poll results

Results for our surveys were quite consistent across regions, so the data has been presented together.

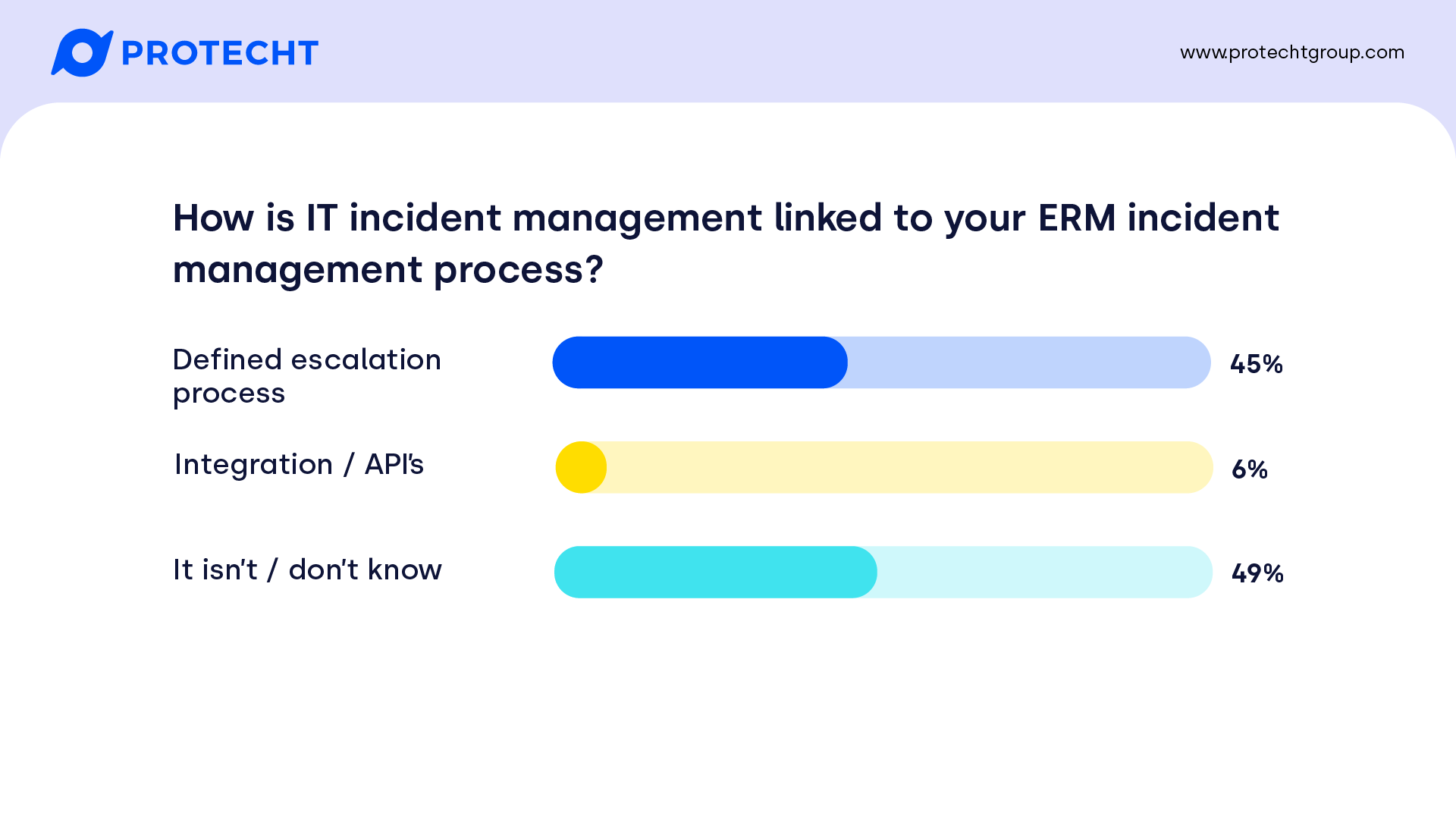

Almost half of our participants had a defined process that escalated their IT incidents into their ERM platform – great to see, and a little higher than we had expected. Of course, this is balanced by those that don’t have any known process that links incidents at the enterprise level. As we’ll see, this overlaps with those that use ERM platforms to capture incidents more generally.

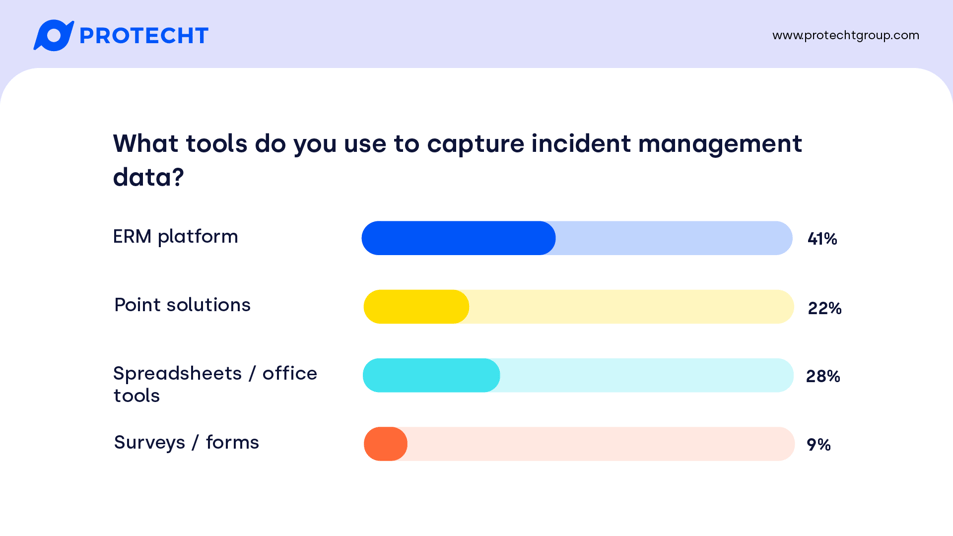

Spreadsheets and office tools are simple to start collecting data, but the lack of audit trail and version control, let alone the manual process, can become burdensome. Their continued use is not surprising, but on a positive note is a little less than I was expecting. Similarly, surveys and forms can be developed to be slick and good interfaces for the front line to report incidents – but are usually poor at enabling effective escalation, automation and follow up. Point solutions, such as specific tools to manage health and safety incidents are usually designed to meet the needs of a specific team, but may lead to duplication or inconsistencies if they need to be aggregated to Executive or Board for reporting.

Questions

Q1: Is there more to be learned during a disruption than after it?

Q2: Are you blurring the lines between incident management and problem management, from an ITIL perspective? Root Cause Analysis would actually occur in problem management.

Q3: You didn't touch on the compliance review to determine potential deemed or significant breach and subsequent regulatory reporting? Where do you think this sits in the process?

Q4: Risk professionals are often not invited to incident response/management/de-briefs – but should they be? If so, how do we as Risk Professionals initiate this?

Q5: What language should we be using to differentiate between an incident vs IT incident?

Q6: What are the pain points in Incident Management for which Internal Audit teams could focus their efforts to help the business?

Q7: Don't all systems causes really come down to people and process?

Q8: Any suggestions for useful IT risk taxonomies?

Q9: On your risk dashboard you reflected inherent and residual impact ratings. Is likelihood reflected elsewhere or is that less relevant in incident management?

Q10: Do you have any suggestions for what sort of metrics to put around the incident management process?

Q1: Is there more to be learned during a disruption than after it?

Certainly while you are in the thick of it (usually no matter what type of incident), that is when the learnings are most salient. The problem is that often this is when 'all hands on deck' are required, and there is little time for someone to be documenting what is happening or creating a detailed timeline, or drawing connections between causes and outcomes.

After the event, sometimes memory gets fuzzy, the timing of events get confused, and details get missed. If you are able to allocate someone to this role – by setting up a good incident management framework that includes this role – then documenting observations and learnings on the way through can produce more accurate data which should result in a better outcome.

Q2: Are you blurring the lines between incident management and problem management, from an ITIL perspective? Root Cause Analysis would actually occur in problem management.

I think we've run into a semantics problem (risk people love jargon!) rather than a functional one.

Under ITIL, we have the following definitions:

- Incident – An unplanned interruption to a service, or reduction in the quality of a service.

- Problem - A cause or potential cause of one or more incidents.

Under an ERM framework, we consider the following definition of an incident:

- Incident – A risk event that results in, or could have resulted in, an impact on objectives.

We further break down risks into the components of causes, risk events, and impacts. Once you do that, problems and causes (which is in the ITIL definition of problem) are fairly synonymous in incident management processes. Perhaps a minor distinction is that the root cause analysis might be initiated when an incident has occurred, but you haven't yet identified the problem / cause - that's the purpose of conducting the root cause analysis.

Q3: You didn't touch on the compliance review to determine potential deemed or significant breach and subsequent regulatory reporting? Where do you think this sits in the process?

As we covered the integration between IT incidents and a broader ERM incident process, we didn't delve into compliance specifically. However, the question can be broadened to cover any type of incident.

From an ERM perspective, we would expect the impacts of any incident are reviewed and captured, which would include assessing any compliance impact. Depending on your specific process and the type of incident, you may have different escalation mechanisms. This might include:

- An initial assessment by the person who has identified the incident on the possibility of a breach so that it can immediately be escalated

- Triaged by a first responder, who can then escalate or assign to an individual who has the requisite expertise to assess against specific regulatory or other compliance requirements

Some of the challenges in the process is when there are regulatory timeframes for specific types of incidents, and data breaches in particular often fall into that camp across regions and sectors. For some critical infrastructure sectors it can be 12 hours, or 72 hours for some financial service providers in some regions. If you are bound by these timelines, then you need to ensure that the right people are notified immediately during a response to make at least an initial assessment in order to meet these guidelines.

My suggestion is that you capture in the initial reporting process of your incident management framework (IT specific or enterprise wide) an escalation mechanism to the appropriate compliance, risk or legal team if there is a suspected breach of any regulatory or compliance obligation.

Q4: Risk professionals are often not invited to incident response/management/de-briefs – but should they be? If so, how do we as Risk Professionals initiate this?

I've been there! The answer to the question may vary slightly depending on whether you are a Line 1 IT risk specialist, sit in Line 2 (whether specialising in technology or not), and the risk maturity of your organisation. Let's start with Line 2.

It may be a matter of addressing risk culture of the organisation, and how Line 2 can support the business. I'd suggest asking key stakeholders whether there is any particular reason you are not invited to participate. Also think about the value you expect to be able to provide (whether immediate, or in later risk assessment processes by being a fly on the wall), and explain the reasons you think you should attend. If you are in Line 2, you shouldn't be asked to address the problem afterwards – this should sit with Line 1.

While there is a different focus for Line 1, the advice above mainly applies – especially if in a risk assessment you are expected to appreciate how the incident (or what was learned from it) affects the assessment. It may also depend if your manager is part of incident response. If this is the case, you may need to discuss with them the value of you attending if you have specific expertise that could be drawn upon.

Q5: What language should we be using to differentiate between an incident vs IT incident?

This can be difficult. It's not elegant, but I prefer to use 'incident' to be all-encompassing, and then 'IT' incident to those that fit into your pre-defined IT incident ticketing / escalation process. Pushing that message consistently to frontline staff helps them identify and report all types of incidents. Of course, IT specialists can still be using the word 'incident' to mean IT specific incidents. Similarly, safety specialists may use the word incident synonymously with injury.

I’ll admit that may not be a very satisfying answer – the key is to work with your stakeholders to determine the language that works for you.

Q6: What are the pain points in incident management where internal audit teams could focus their efforts to help the business?

Depending on the scope of the audit, internal audit could contribute by assessing and providing assurance over the effectiveness of the incident management process, which could include assessing the integration with other disciplines and processes, such as BCP and Crisis Management, Enterprise Risk Management, Vendor Risk Management, and so on.

Risk management teams could similarly contribute. They should be considering how information captured in incidents is aligned with other data capture so that it can be used by those other teams. We are finding this integration to be a big part of the puzzle for Operational Resilience, with teams across the organisation using the same taxonomies and language. If risk teams are called in to act as facilitators for root cause analysis, they are well placed to ensure that this knowledge sharing takes place.

Imagine the wasted knowledge of an incident that isn’t used to:

- Update risk assessments with new information gained that might prompt updates to controls

- calling out what did and didn’t work in lower-level incident response that could also be used to update Business Continuity Plans

- Revise vendor due diligence processes to avoid similar vendor-related incidents

Q7: Don't all systems causes really come down to people and process?

This could become a circular debate! For our customers that have cause taxonomies, we often find they roll up to four categories: People, Systems, Process and External, usually with a handful of specific causes underneath them. Ultimately this classification is only useful if it is (or could be) actionable.

You could argue that if there was a system problem, perhaps a process wasn’t followed that led to the system failure, or a person conducted a malicious act, resulting in system failure. One of the messages in the webinar was to consider the contributing causes, rather than find a single root cause. Ultimately, the classification is intended to answer the question “What should we do about it?” You might have an incident where an update to training is warranted to address the people side, alongside a change to systems that also reduces the likelihood of reoccurrence.

As a counterpoint to your comment about causes coming down to people, I always challenge others to think beyond people as a root cause. If someone made an error or a sub-optimal decision, what environment did we put them in, and how much did that environment (including the systems they use) contribute to their behaviour? It might be negligible and perhaps it is just a people problem, but we need to make sure we are assessing as many relevant contributing factors as possible.

Q8: Any suggestions for useful IT risk taxonomies?

We are advocates of using taxonomies at Protecht. We recommend them for risks, causes, impacts, and controls. While we see it rarely, we also suggest them for processes as well.

NIST recently released a document on Adversarial Machine Learning including taxonomies if it’s of interest – with the caveat that I haven’t reviewed it to recommend its content. There have been instances where Protecht have reviewed taxonomies that we don’t fully agree with (usually mixing up the different types, such as impacts or failed controls recorded as risks).

One suggestion is to ensure there is alignment between any IT risk taxonomies you have, and your Enterprise Risk Management framework. You may need those more detailed taxonomies if you want to demonstrate adherence to specific IT frameworks, but consider whether they ‘ladder up’ to an IT category in your ERM framework. This can help with enabling incidents to be viewed with the enterprise lens (using the entire taxonomy), not just an IT one.

Q9: On your risk dashboard you reflected inherent and residual impact ratings. Is likelihood reflected elsewhere or is that less relevant in incident management?

This is referring to our Risk In Motion dashboard, below. This dashboard highlights the risks that the organisation or business unit faces, and links it all the other risk information you may collect related to that risk, including incidents.

The inherent and residual risk ratings are not related directly to the individual incidents. However by bringing together all of the risk information, it can help provide assurance, inform decision makers, or prompt additional questions of management. For example, if the residual risk rating was low but there had been recent high impact incidents, the Board or Executive may want to ask what has been done since the incidents occurred to warrant the low residual risk rating.

Q10: Do you have any suggestions for what sort of metrics to put around the incident management process?

One thing to keep in mind when defining metrics are incentives. If you make the number of incidents reported a target, guess what happens... incidents get swept under the rug. Consider whether the metrics are key performance indicators (which usually means someone is accountable to keep them within target) or are key risk indicators (triggers to take action outside of a threshold, not a target). Monitoring the latter and treating them as ‘health’ metrics for the incident process enables stakeholders to collectively consider improvements to the process.

Here are a few targets or metrics I’ve seen and avoided or changed when I’ve encountered them:

- Number of incidents that involved an individual / business unit (this usually turns into a blame game)

- Target for closing an incident within a defined timeframe (this can prompt the minimal effort required and may not address root cause)

A few that I’ve used:

- Formal update on incident response provided at least every 30 days (which can include when there is no update or legitimate explanation of other priorities)

- Time between identification and formal escalation.

Changes in metrics over time can be as valuable or perhaps more valuable than point in time metrics. The actual amount of time taken to resolve incidents might be within tolerance, but if it has been increasing consistently over a period of time, you may want to investigate.

Whatever metrics you choose, ensure they drive the right behaviour while providing actionable insights.Next steps: watch our incident management webinar

We live in a world where incidents – both minor and major – occur with increasing frequency. These incidents may impact on our organisations, our stakeholders, and our broader communities – in some cases they may threaten the very survival of our organisations.

In this webinar, Protecht’s Research and Content Lead Michael Howell and Chief Research and Content Officer, David Tattam will provide you with an overview of incident management, and how an integrated approach can improve your operational resilience.